Bias in = Bias out

My day at Women in Data Science Netherlands 2022

The day started with embracing responsible AI. Intuitively you would say that everyone wants AI to be applied responsibly. But what is responsible AI? I guess we’re still trying to figure it out. Arlette gave some good ideas: define your organization’s AI principles, make concrete how to apply them in the different phases of a project, and simply talk about them.

And you don’t have to start from scratch. As an example, Philips shared its AI principles:

- Health and well-being

- Human oversight

- Robustness

- Fairness to avoid bias and discrimination

- Transparency

- Security

- Privacy

- Benefit customers, patients and society

These principles sound reasonable, but applying them can be a challenge. For example, we all know we should write documentation. It’s part of transparency and robustness. Good documentation makes the strengths and weaknesses of a project visible. Several talks stressed that it’s essential to know AND share the limitations.

Responsible AI can also be linked to climate change. In many climate change initiatives, data and AI play a central role. But there is also a downside: training models on GPUs consumes a lot of energy. While we’re doing all this cool stuff, we should not forget this. How do you make your AI greener? What’s your AI energy label?

The theme of the event was “Bias in Data Science”, and every speaker addressed bias in their talk. What did I learn from this? Although bias has a dark side, it doesn’t have to be something terrible, at least if you are aware of it.

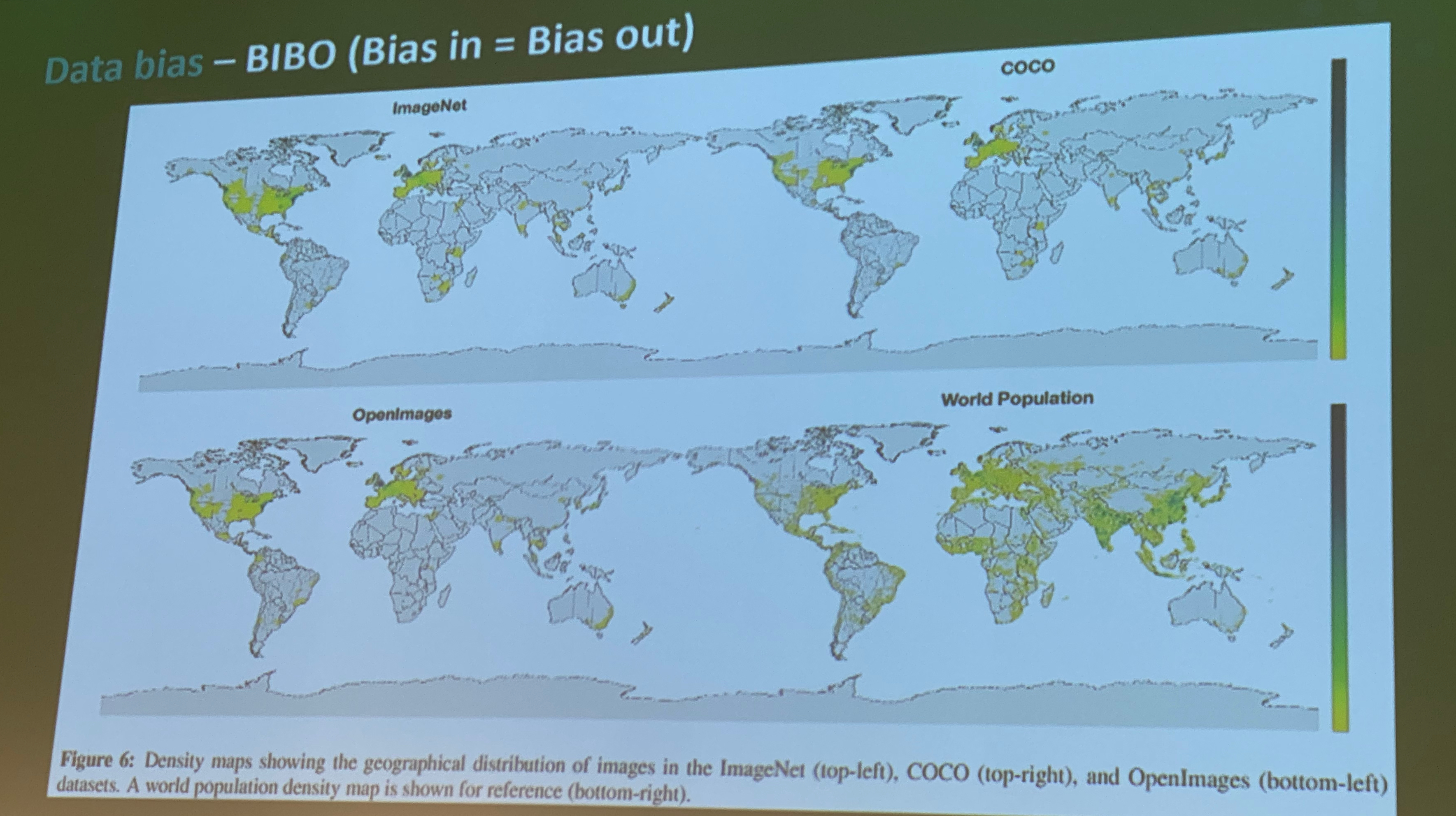

Keep in mind that if what you’re trying to model isn’t in the dataset, you can’t expect the model to “answer” it for you. See the header image of this post. The density maps show the geographic distribution of popular open image datasets, and the map at the bottom right shows the world population. These datasets are used to train models. If you look closely, you see that almost no images come from Asia and Africa. This means that any model trained on these datasets is biased. We can’t fix this. But we must be aware of it and make sure that solutions built on biased data don’t discriminate.

And there was a lot more:

- An electron microscope is just a very expensive digital camera…that is 4 meters tall.

- Human refinement will be needed.

- Documentation, documentation, documentation.

- Don’t keep it to yourself. Open up and share more.

- AI incident database: https://incidentdatabase.ai/

- If it’s not in the data, it won’t end up in the model.

- Process is about the journey.

- It’s time for legal experts to set the boundaries of what’s allowed.

- More and more researchers are making the data & code they create publicly available. Nice!

- Moving forward by trial and error. Make it safe to fail.

- Educate early in school. Let people grow up with technology, so they can use it to start new innovations.

A day full of sharing, gaining inspiration and meeting new people. Well done to everyone who made this day possible!

The photo was taken during the keynote given by Véronique van Vlasselaer.